Detecting AI-generated text: Are we looking at an infinity mirror of illusive control?

Since OpenAI launched ChatGPT in November 2022, the teaching community has faced a flurry of questions, issues, and challenges. Many of them concern assessment, which often relies on text-based assignments rather than hands-on or performance-based demonstrations. When learner assessment relies exclusively on written reports and other text-based work, questions of authorship, plagiarism, and academic dishonesty move to the center of the discussion about AI text-generation tools, especially in higher education.

Historically, the response to these challenges has been some form of policing or control. Just a couple of years ago, when most teaching and learning had to pivot to online and virtual formats as a result of the COVID-19 pandemic, instructors adopted policing and controlling approaches for the very same reason: to ensure that learners “don’t cheat” on their assessments. These approaches created a myriad of unintended consequences, such as exacerbating social inequalities, invading private learning spaces, and bringing to life the “Big Brother is Watching You” forewarning of Orwell’s 1984.

DALL·E-generated image. Curated by Ana-Paula Correia.

Paredes et al.’s (2021) research on remote proctoring of exams highlights that although the method was implemented to curb academic dishonesty, it inadvertently led to feelings of intrusion and heightened anxiety among students, thereby undermining the original purpose. Students attributed this to the feeling of being under surveillance rather than being driven by internal motivation or a personal process of reflection to be academically honest.

Is history repeating itself with AI-generated text detectors?

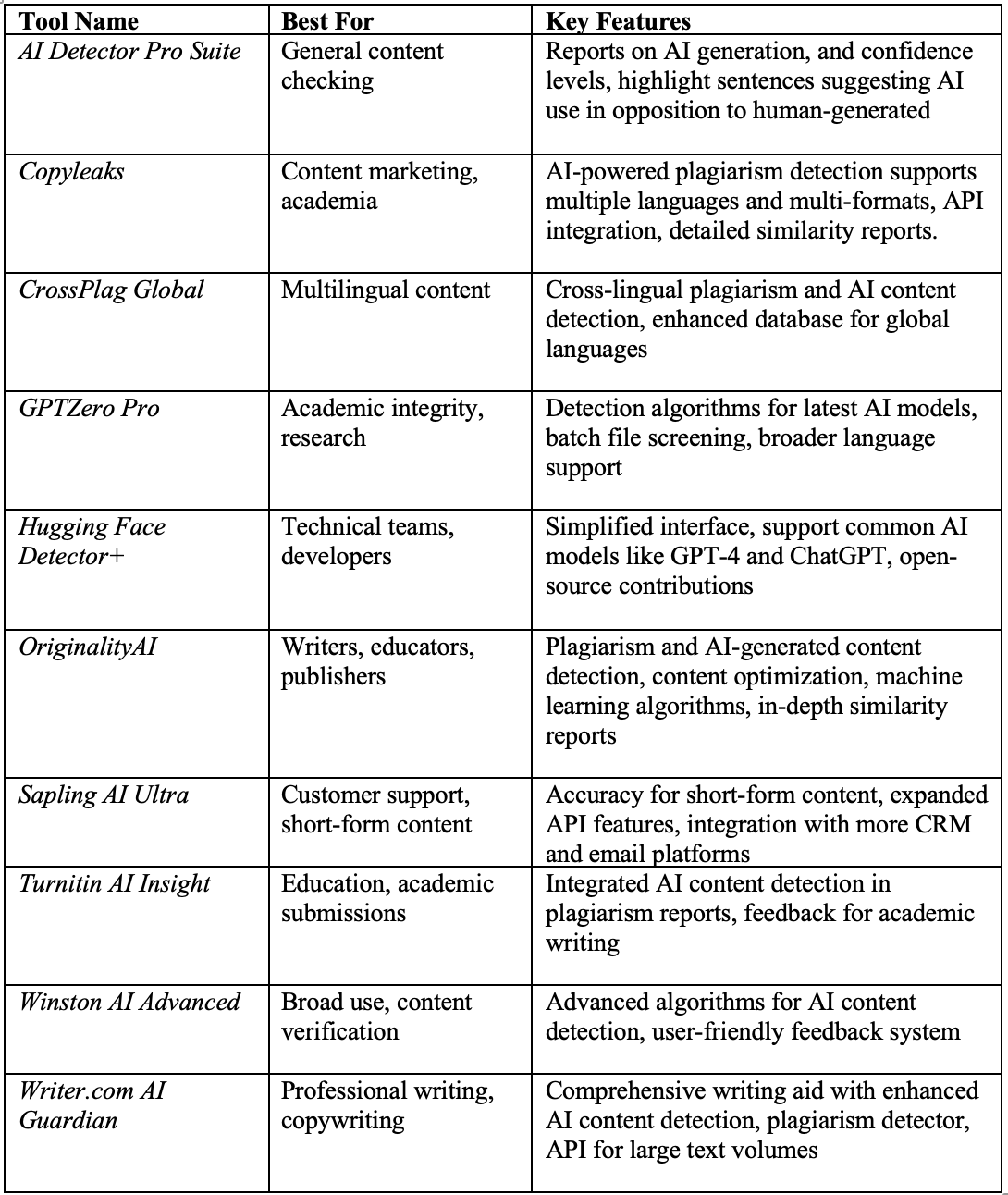

Let us start by examining the AI content detector tools available on the market as of January 2024. The table below compares these tools in terms of their target audience and key features.

Interestingly, on January 31, 2023, OpenAI announced on its blog the launch of a new AI classifier for indicating AI-written text. However, it was discontinued on July 20, 2023. OpenAI reported that “the AI classifier is no longer available due to its low rate of accuracy. We are working to incorporate feedback and are currently researching more effective provenance techniques for text and have made a commitment to develop and deploy mechanisms that enable users to understand if audio or visual content is AI-generated” (OpenAI, 2023, para. 1).

AI content detector tools, their target audience, and key features (January 2024).

AI content detector tools offer a range of features, from plagiarism detection to the detection of AI-generated content, with varying degrees of reliability and a variety of pricing plans. They cater to different needs, from academic research to content marketing, which makes them useful to a wide array of users. However, these tools have many reported limitations: they can misidentify human-written text as AI-written, they focus primarily on the English language, and they tend to be expensive, with limited free plans. Most are subscription-based with premium options.

Are AI-generated text detectors really effective? Are they living up to their promises? Or are we creating an infinity mirror where the illusion of control is fed into a never-ending tunnel?

Since all of these AI content detector tools operate on likelihood, they are unable to offer conclusive evidence that content is generated by AI. That means that AI detectors analyze text and make determinations based on statistical probabilities rather than absolute certainties. Essentially, these tools assess various factors and patterns within the text to estimate the chance that it was written by an AI system. They use algorithms that have been trained on datasets of known AI-generated and human-written content to identify markers or characteristics typical of AI writing.

For instance, they might look at the complexity of the vocabulary, the variability in sentence structure, the flow of ideas, or other linguistic features that AI models commonly exhibit. Based on these data, the detectors then assign a probability score indicating how likely it is that the text was AI-generated. However, since these are probabilities and not definitive indicators, there is always a margin of error, and the results are not 100% guaranteed to be correct. There may be instances where AI-generated text appears very human-like and vice versa, which can lead to false positives (wrongly identifying human text as AI-generated) or false negatives (failing to identify AI-generated text).
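To make the idea of a probability score concrete, here is a minimal sketch in Python. It does not reproduce how any real detector works: the `Essay` class, the `confusion_counts` function, and every score in `SAMPLE` are made up for illustration. It only shows that once a score is turned into a yes/no flag by a cutoff, some human texts land above the cutoff and some AI texts land below it.

```python
from dataclasses import dataclass


@dataclass
class Essay:
    label: str    # ground truth: "human" or "ai"
    score: float  # hypothetical detector output: estimated probability of AI authorship


def confusion_counts(essays, threshold):
    """Count outcomes when every essay scoring at or above `threshold` is flagged as AI."""
    counts = {
        "true_positive": 0,   # AI text, flagged
        "false_positive": 0,  # human text, wrongly flagged
        "true_negative": 0,   # human text, not flagged
        "false_negative": 0,  # AI text, missed
    }
    for essay in essays:
        flagged = essay.score >= threshold
        if flagged and essay.label == "ai":
            counts["true_positive"] += 1
        elif flagged:
            counts["false_positive"] += 1
        elif essay.label == "human":
            counts["true_negative"] += 1
        else:
            counts["false_negative"] += 1
    return counts


# Invented scores, for illustration only.
SAMPLE = [
    Essay("human", 0.10), Essay("human", 0.35), Essay("human", 0.72),
    Essay("ai", 0.40), Essay("ai", 0.80), Essay("ai", 0.95),
]

for threshold in (0.3, 0.5, 0.9):
    print(threshold, confusion_counts(SAMPLE, threshold))
```

With these invented scores, a low cutoff of 0.3 catches all three AI essays but wrongly flags two human ones, while a strict cutoff of 0.9 flags no human essay but misses two AI essays. No cutoff removes both kinds of error at once.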

Along the same lines, Mills (2023) investigated the effectiveness of AI detection tools, notably GPTZero and Turnitin, in pinpointing content crafted by AI language models. The study proposed that a dependable AI detection tool ought to identify AI-written content with about 99% accuracy, a benchmark it considers crucial for educators to determine instances of academic dishonesty consistently and accurately. The results revealed a complex reality, with detection success rates varying widely from 0% to 100% among different essays and tools. The study brought into focus the concepts of "false negatives" and "false positives" as outcomes of the detection process, emphasizing the challenge of consistently detecting AI-generated content and the imperative for ongoing research and enhancement of AI detection technologies.
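A small back-of-the-envelope calculation can illustrate why a benchmark as high as 99% matters. The sketch below is not taken from Mills (2023): the batch size, the share of AI-written essays, and the reading of "accuracy" as both the rate of catching AI text (sensitivity) and the rate of clearing human text (specificity) are all assumptions made for illustration, as are the function names.

```python
def expected_flags(n_essays, ai_share, sensitivity, specificity):
    """Return the expected (true flags, false flags) for a batch of essays.

    sensitivity: assumed share of AI-written essays the detector flags
    specificity: assumed share of human-written essays the detector clears
    """
    n_ai = n_essays * ai_share
    n_human = n_essays - n_ai
    true_flags = n_ai * sensitivity
    false_flags = n_human * (1 - specificity)
    return true_flags, false_flags


def flag_precision(true_flags, false_flags):
    """Share of flagged essays that really are AI-written."""
    total = true_flags + false_flags
    return true_flags / total if total else 0.0


# Hypothetical scenario: 1,000 essays, 10% of them AI-written.
for rate in (0.99, 0.90):
    true_flags, false_flags = expected_flags(1000, 0.10, rate, rate)
    print(f"rate={rate:.2f}  true flags={true_flags:.0f}  "
          f"false flags={false_flags:.0f}  "
          f"precision={flag_precision(true_flags, false_flags):.2f}")
```

Under these assumptions, a 99% detector would still wrongly flag about 9 of the 900 human-written essays. At 90%, about 90 honest essays would be flagged, as many as the AI-written essays it catches, so a flag would be no better than a coin toss.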

Initially hailed as the ultimate defense for academic institutions against the proliferation of automated writing, AI-generated content detectors have since had their efficacy called into question. These tools lack the precision needed to pinpoint instances of academic misconduct: they fail to identify actual cases of cheating while also producing false positives (Roberts, 2023).

DALL·E-generated image. Curated by Ana-Paula Correia.

An infinity mirror creates an endless series of diminishing reflections by using two or more mirrors set up to bounce light between them. Likewise, as we race to uncover AI-generated text to find out whether our students engaged in what could be called “cheating,” we run the risk of gazing into an analogous reflective loop. This pursuit risks producing inconclusive or unreliable judgments based solely on these repetitive reflections. Moreover, it risks neglecting the subtle expressions of creativity, reinforcing biases inherent in the training data, and missing the contextual nuances essential for interpreting the content accurately.

So, what do we do?

As AI technology evolves, so do the methods for detecting AI-generated content. This means that the effectiveness of these tools can vary over time. Tools that are effective against current models may need updates to detect content generated by newer, more advanced models.

There are ethical considerations regarding the use of AI-generated content and its detection. For instance, in academia, the use of AI-generated content without disclosure raises questions of authenticity and integrity. In practice, relying solely on these tools for critical decisions (like academic grading or academic integrity) without human oversight might create new problems like overlooking nuanced expressions of creativity, perpetuating biases present in the training data and system design, and failing to capture the context that guides the interpretation of the content. Furthermore, false positives may result in baseless allegations of wrongdoing, whereas false negatives could let breaches of academic honesty go unnoticed.

As Weber-Wulff et al.’s (2023) research indicates, there is no simple method for identifying AI-generated text. Using AI content detector tools may be inherently unfeasible, at least for the time being. Consequently, educators should concentrate on preventive actions and reconsider approaches to assessment rather than on detection methods. For example, assessments should evaluate the progression of students' skills development instead of just the end result.

We argue that AI-generated content detectors are best used in conjunction with human expertise and other methods of analysis to ensure the highest levels of accuracy and integrity in content validation. At the same time, educators should design assignments as opportunities for application and practice (e.g., project-based assessment) in which students cannot rely exclusively on AI-generated text.

As Williams (2023) explains, a growing number of companies and start-ups are developing tools aimed at countering the use of artificial intelligence, drawn by the promise of potentially profitable margins. However, this raises a critical question: while these tools are designed to address the challenges posed by AI in written assessments, could they have unintended consequences that introduce new issues outweighing the benefits they are intended to provide? Only time will tell.

References

Mills, J. (2023, December 5). Case study on the capabilities of AI Generative text detection. Symposium on AI Opportunities and Challenges hosted by Mid-Sweden University and the University of Gävle, Sweden, in conjunction with Academic Conferences International.

OpenAI. (2023, January 31). New AI classifier for indicating AI-written text. OpenAI Blog. https://openai.com/blog/new-ai-classifier-for-indicating-ai-written-text

Orwell, G. (1977). 1984. Harcourt Brace Jovanovich (first edition published in 1949).

Paredes, S. G., Jasso Peña, F. J., & La Fuente Alcazar, J. M. (2021). Remote proctored exams: Integrity assurance in online education? Distance Education, 42(2), 200-218. https://doi.org/10.1080/01587919.2021.1910495

Roberts, M. (2023, December 12). AI is forcing teachers to confront an existential question. The Washington Post. https://www.washingtonpost.com/opinions/2023/12/12/ai-chatgpt-universities-learning/

Weber-Wulff, D., Anohina-Naumeca, A., Bjelobaba, S. et al. (2023). Testing of detection tools for AI-generated text. International Journal for Educational Integrity, 19(26). https://doi.org/10.1007/s40979-023-00146-z

Williams, T. (2023, February 6). Inside the post-ChatGPT scramble to create AI essay detectors. The Times Higher Education. https://www.timeshighereducation.com/depth/inside-post-chatgpt-scramble-create-ai-essay-detectors

-- Please cite the content of this blog: Correia, A.-P. (2024, February 20). Detecting AI-generated text: Are we looking at an infinity mirror of illusive control? Ana-Paula Correia’s Blog. https://www.ana-paulacorreia.com/anapaula-correias-blog/2024/2/10/detecting-ai-generated-text-are-we-looking-at-an-infinity-mirror-of-illusive-control